CrowdPlay Features

CrowdPlay has been designed with a number of features to allow easy, rapid collection of large-scale datasets tied into existing RL and offline learning pipelines.

Interfacing with off-the-shelf RL environments

CrowdPlay interfaces with standard OpenAI Gym and Gym-like environments. In principle, any RL environment that provides step() and reset() functions can be streamed through CrowdPlay. This opens the entire ecosystem of existing RL simulators to crowdsourcing large-scale human demonstration datasets.
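To illustrate what "provides step() and reset() functions" means in practice, here is a minimal sketch of a toy environment and a loop that drives it and records transitions. It assumes the classic OpenAI Gym convention (reset() returns an observation; step() returns observation, reward, done flag and info dict); newer Gymnasium releases return five values from step() instead. The names CountdownEnv and run_episode are hypothetical and are not part of CrowdPlay's API.

```python
from typing import Any, Callable, Dict, List, Tuple


class CountdownEnv:
    """Hypothetical toy Gym-like environment: counts down from `start` to 0.

    Uses the classic Gym convention: reset() -> observation,
    step(action) -> (observation, reward, done, info).
    """

    def __init__(self, start: int = 5) -> None:
        self.start = start
        self.state = start

    def reset(self) -> int:
        self.state = self.start
        return self.state

    def step(self, action: int) -> Tuple[int, float, bool, Dict[str, Any]]:
        # Action 1 decrements the counter; any other action is a no-op.
        if action == 1:
            self.state -= 1
        reward = 1.0 if action == 1 else 0.0
        done = self.state <= 0
        return self.state, reward, done, {}


def run_episode(env: Any, policy: Callable[[Any], int], max_steps: int = 1000) -> List[tuple]:
    """Drive any object exposing reset()/step() and record each transition."""
    trajectory = []
    obs = env.reset()
    for _ in range(max_steps):
        # Assumption: in a streaming setup the action would be a human input
        # relayed from a browser client; here it comes from a Python callable.
        action = policy(obs)
        next_obs, reward, done, info = env.step(action)
        trajectory.append((obs, action, reward, next_obs, done))
        obs = next_obs
        if done:
            break
    return trajectory
```

For example, `run_episode(CountdownEnv(3), lambda obs: 1)` records three transitions and ends when the counter reaches zero. Because the loop only touches reset() and step(), the same driver works for any environment that follows this interface.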

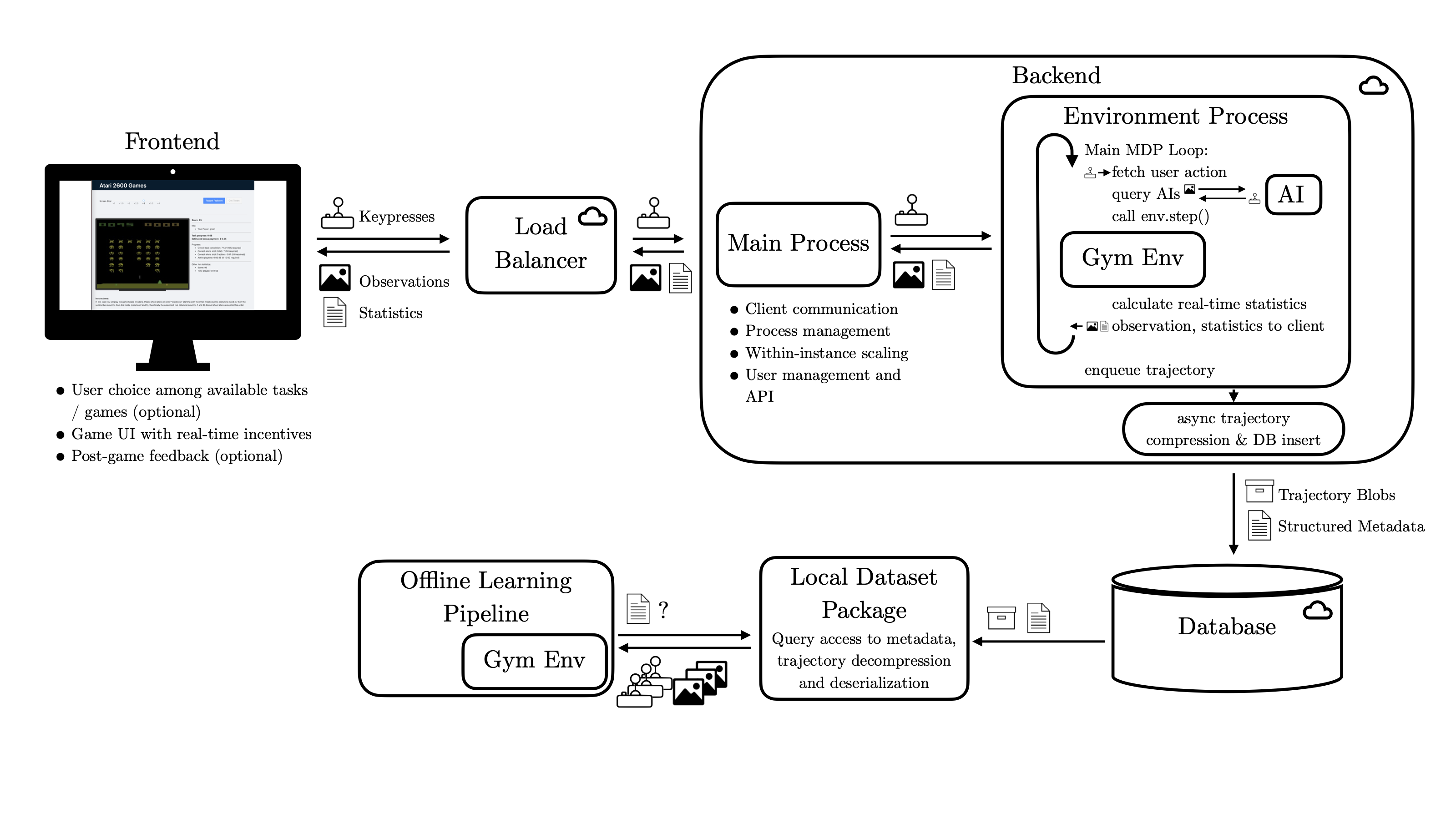

Scalable Architecture

CrowdPlay provides a highly extensible, high-performance client-server architecture for streaming RL environments to remote web browser clients. The backend is highly scalable and supports load balancing both within and across instances.
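The two levels of load balancing can be pictured with a minimal least-loaded placement sketch: a new session first goes to the backend instance with the fewest active sessions (across-instance), then to the least busy worker process on that instance (within-instance). This is an illustrative assumption, not CrowdPlay's actual scheduler; the Instance class and assign_session function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Instance:
    """Hypothetical backend instance hosting several worker processes."""
    name: str
    num_workers: int
    # Number of active environment sessions on each worker process.
    loads: List[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.loads:
            self.loads = [0] * self.num_workers

    def total_load(self) -> int:
        return sum(self.loads)


def assign_session(instances: List[Instance]) -> Tuple[str, int]:
    """Place a new session on the least-loaded worker of the least-loaded instance."""
    # Across-instance balancing: pick the instance with the fewest sessions
    # (ties go to the first instance in the list).
    inst = min(instances, key=lambda i: i.total_load())
    # Within-instance balancing: pick the worker with the fewest sessions.
    worker = min(range(inst.num_workers), key=lambda w: inst.loads[w])
    inst.loads[worker] += 1
    return inst.name, worker
```

With two instances of two workers each, four consecutive sessions land on `("a", 0)`, `("b", 0)`, `("a", 1)` and `("b", 1)`, leaving every worker with exactly one session.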

Multi-Agent Environments

CrowdPlay supports multi-agent environments, where each agent is controlled by a separate web browser client. Participants interacting in the same environment can be located anywhere in the world. Upon entering the CrowdPlay app, users can be automatically assigned to environments that are waiting for participants.
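Automatic assignment to waiting environments can be sketched as a simple lobby: each arriving user fills the next free agent slot of an environment that is still waiting, and once all slots are taken the environment starts and a fresh one is opened for the next arrival. The Lobby and WaitingEnv names and the first-come, first-served policy are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class WaitingEnv:
    """Hypothetical multi-agent environment that needs `num_agents` participants."""
    env_id: int
    num_agents: int
    participants: List[str] = field(default_factory=list)

    @property
    def full(self) -> bool:
        return len(self.participants) >= self.num_agents


class Lobby:
    """Hypothetical matchmaker that assigns arriving users to waiting environments."""

    def __init__(self, num_agents: int) -> None:
        self.num_agents = num_agents
        self.waiting: List[WaitingEnv] = []
        self.started: List[WaitingEnv] = []
        self._next_id = 0

    def join(self, user_id: str) -> int:
        # Reuse the oldest environment still waiting for participants,
        # or open a new one if none is waiting.
        if self.waiting:
            env = self.waiting[0]
        else:
            env = WaitingEnv(self._next_id, self.num_agents)
            self._next_id += 1
            self.waiting.append(env)
        env.participants.append(user_id)
        # Once every agent slot is filled, the environment can start.
        if env.full:
            self.waiting.remove(env)
            self.started.append(env)
        return env.env_id
```

In a two-player lobby, "alice" and "bob" are both placed in environment 0, which then starts, while "carol" opens environment 1 and waits for a partner.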

Mixed human-AI Environments

In multi-agent environments, agents can also be controlled by a mix of humans and AI. AI agents can be trained using standard RL pipelines such as RLlib. CrowdPlay automatically handles image preprocessing for AI agents, for instance DeepMind-style preprocessing for Atari 2600 games.
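As a rough picture of what DeepMind-style Atari preprocessing involves, the sketch below takes a pixel-wise max over consecutive raw frames to suppress flicker, converts to grayscale, downsamples to 84x84 and stacks the last four frames. It uses only NumPy and is a simplification: frame skipping is omitted and nearest-neighbour resizing stands in for the area or bilinear interpolation real pipelines typically use. The function and class names are hypothetical, not CrowdPlay's implementation.

```python
from collections import deque

import numpy as np


def to_grayscale(frame: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB frame to an HxW uint8 luminance image."""
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    gray = frame.astype(np.float32) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def resize_nearest(frame: np.ndarray, size: int = 84) -> np.ndarray:
    """Nearest-neighbour resize of an HxW image to size x size (simplification)."""
    h, w = frame.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return frame[rows[:, None], cols[None, :]]


class FrameStacker:
    """Keep the last k preprocessed frames as the agent's observation."""

    def __init__(self, k: int = 4, size: int = 84) -> None:
        self.k = k
        self.size = size
        self.frames = deque(maxlen=k)
        self.prev_raw = None

    def preprocess(self, raw: np.ndarray) -> np.ndarray:
        # Pixel-wise max of the current and previous raw frame removes Atari sprite flicker.
        merged = raw if self.prev_raw is None else np.maximum(raw, self.prev_raw)
        self.prev_raw = raw
        return resize_nearest(to_grayscale(merged), self.size)

    def reset(self, raw: np.ndarray) -> np.ndarray:
        # Fill the stack with copies of the first frame so the shape is fixed from the start.
        self.prev_raw = None
        frame = self.preprocess(raw)
        for _ in range(self.k):
            self.frames.append(frame)
        return np.stack(self.frames, axis=0)

    def step(self, raw: np.ndarray) -> np.ndarray:
        self.frames.append(self.preprocess(raw))
        return np.stack(self.frames, axis=0)
```

Given a standard 210x160 RGB Atari frame, both reset() and step() return a uint8 array of shape (4, 84, 84), which is the observation format commonly fed to DQN-style agents.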

Rich User Interface

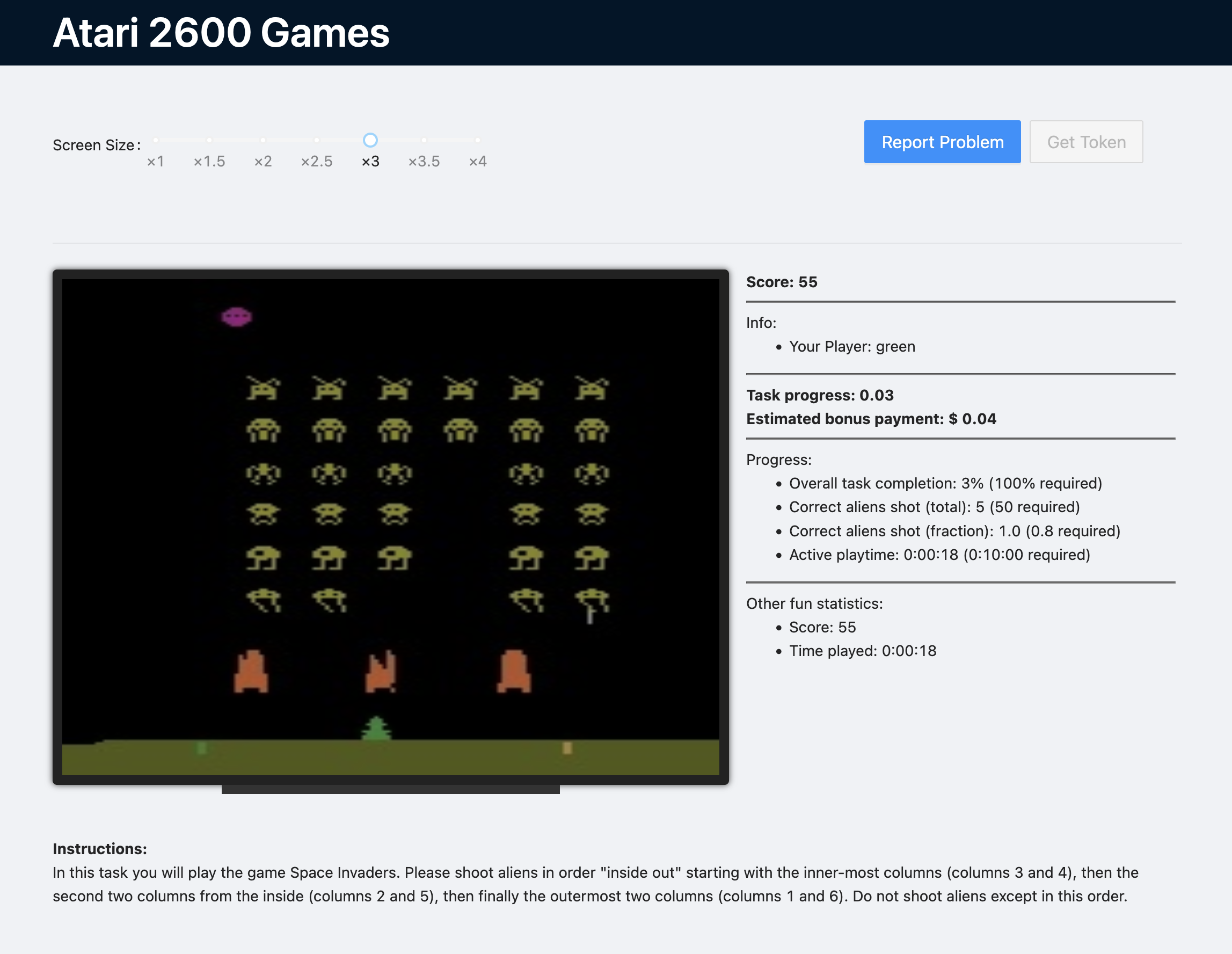

CrowdPlay comes with a rich, modern user interface. It can show detailed instructions as well as real-time feedback to participants. For paid participants, progress and estimated payments can also be displayed in real time.

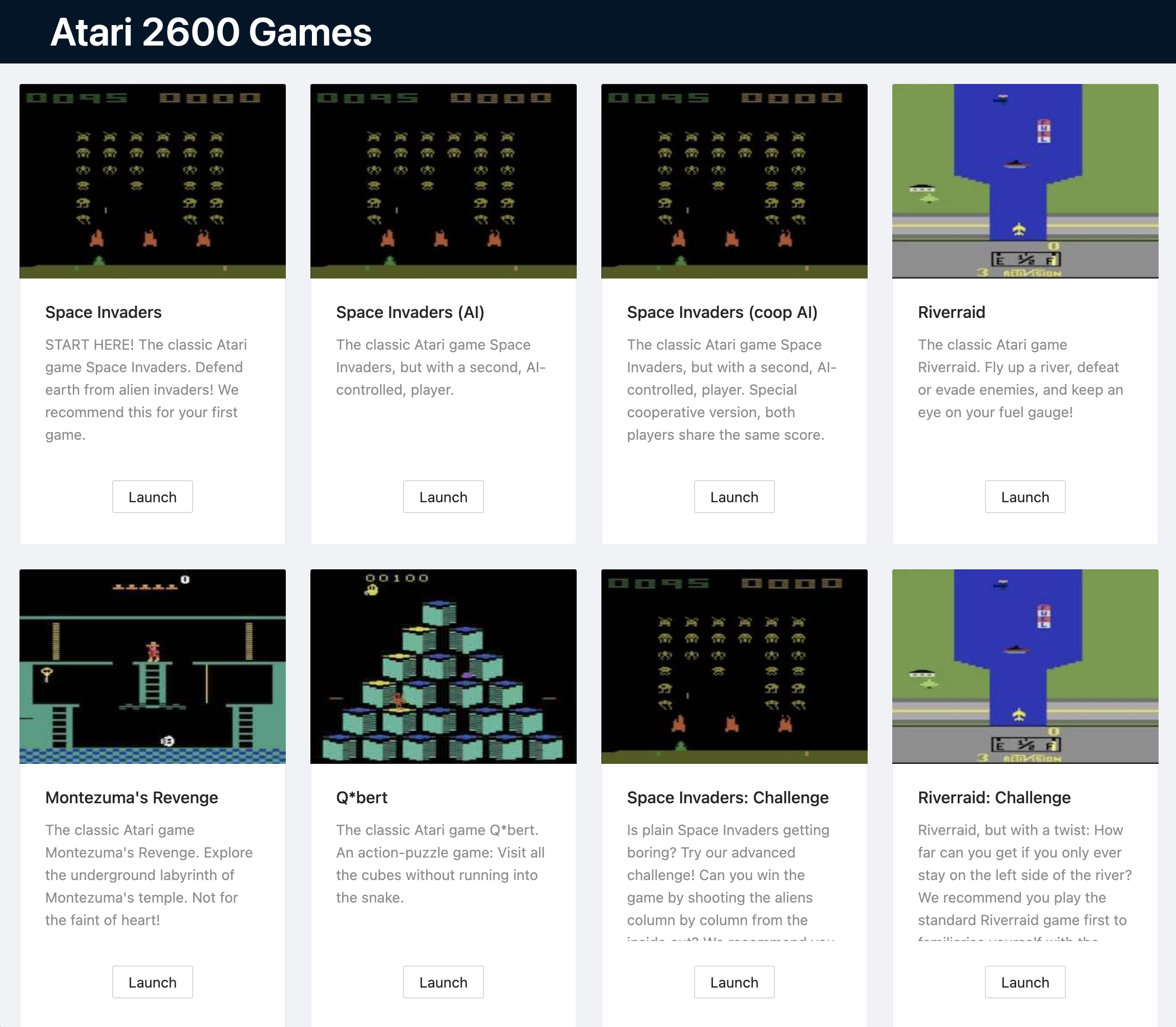

A “game library”-style interface lets participants choose among available environments.

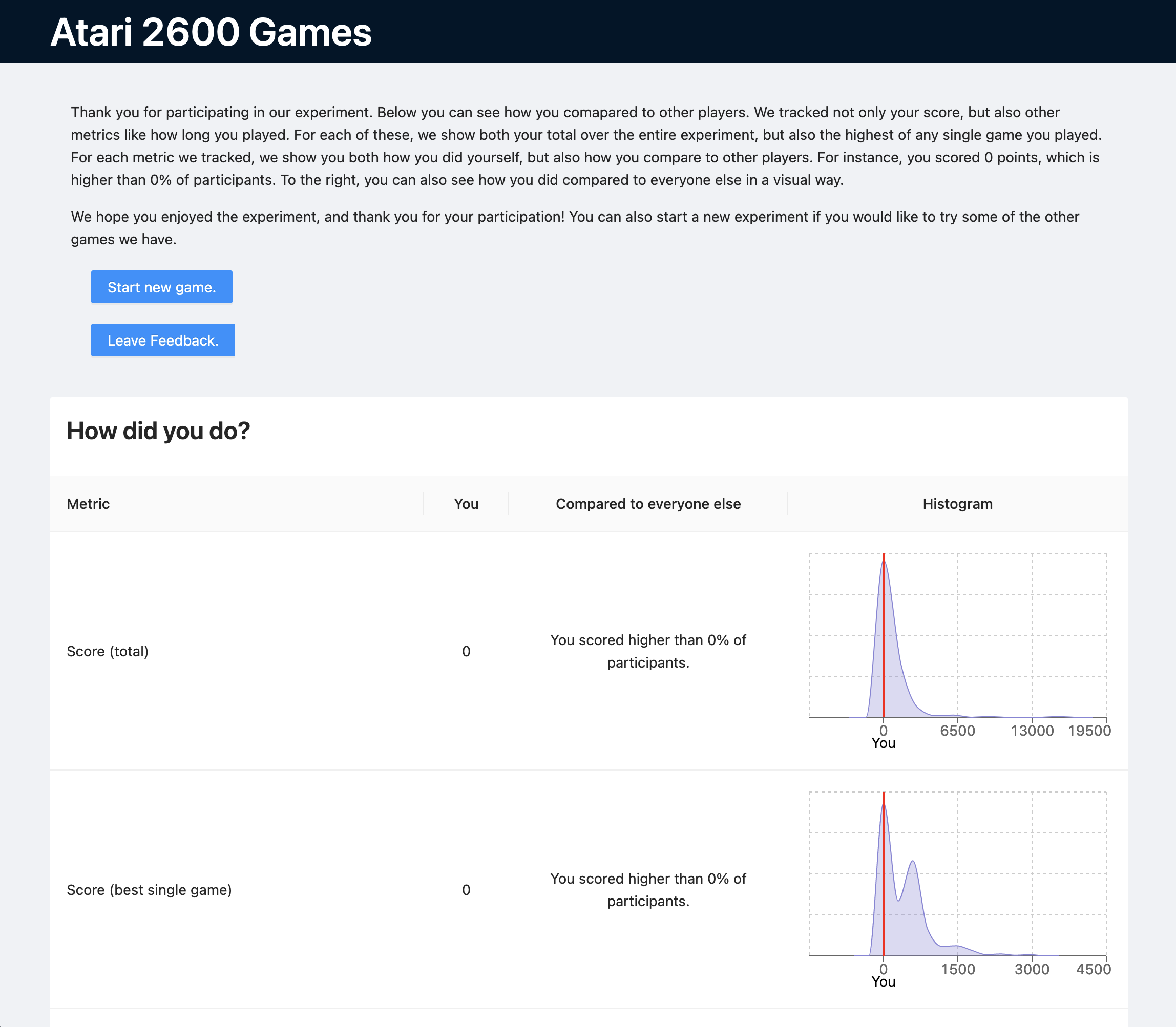

After an experiment, participants can view their performance relative to other participants.
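One simple way to express "performance relative to other participants" is a percentile rank: the share of recorded scores that fall strictly below a participant's score. The percentile_rank helper below is a hypothetical sketch of such a metric, not necessarily what CrowdPlay displays.

```python
from bisect import bisect_left
from typing import Sequence


def percentile_rank(score: float, all_scores: Sequence[float]) -> float:
    """Percentage of scores in `all_scores` that are strictly below `score`."""
    if not all_scores:
        raise ValueError("need at least one score to compare against")
    ordered = sorted(all_scores)
    # bisect_left counts how many sorted scores are strictly less than `score`.
    below = bisect_left(ordered, score)
    return 100.0 * below / len(ordered)
```

For instance, a score of 50 among recorded scores of 10, 20, 50 and 80 beats two of the four, giving a percentile rank of 50.0.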